OpenStack VM Creation Flow and Source Code Analysis (Part 1)

Based on OpenStack Stein.

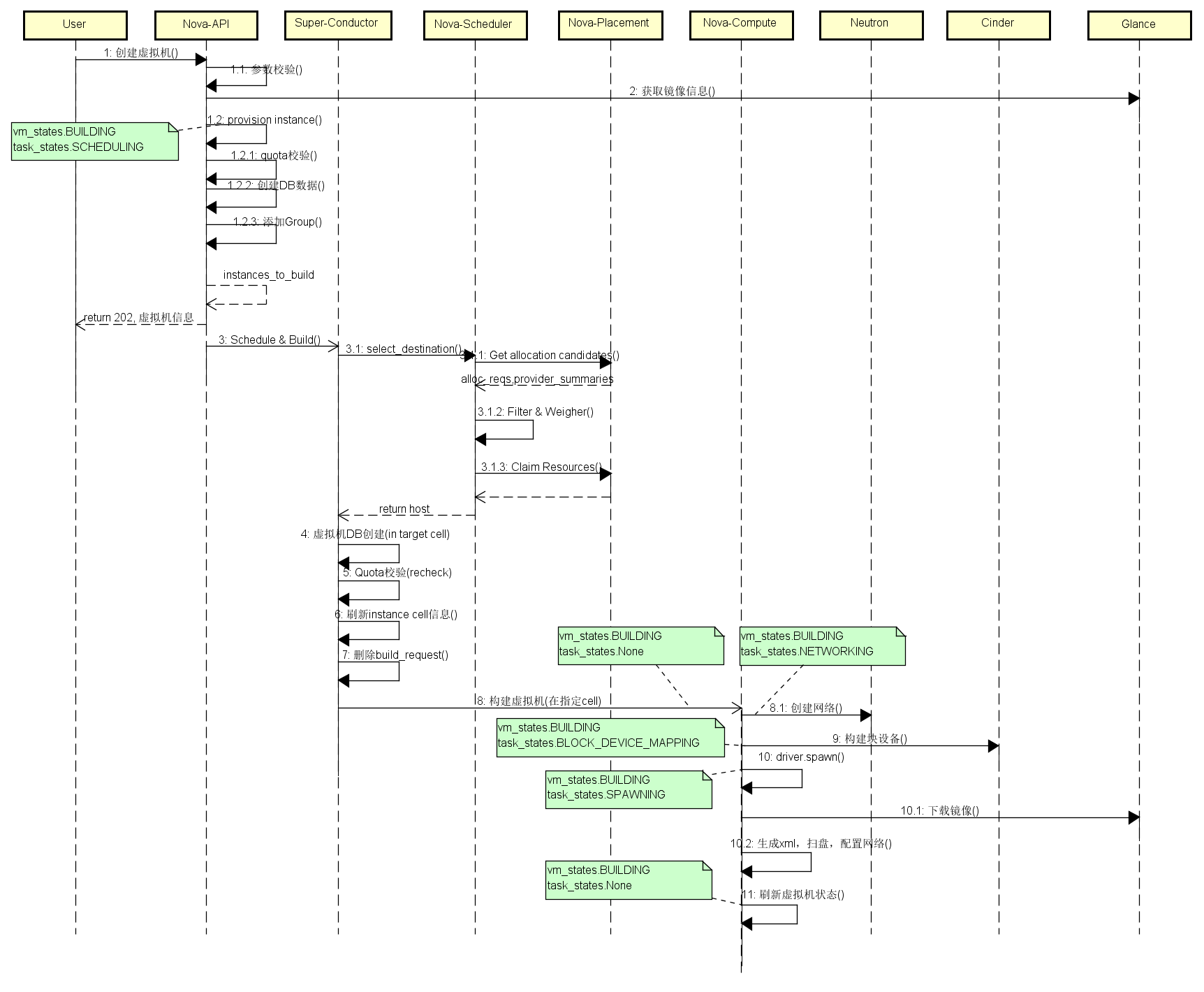

VM creation flowchart

The figure above shows the overall VM creation flow. A request passes in turn through the main services: API, Conductor, Scheduler, Placement, and Compute.

The table below lists the instance state at each step:

| Status | Task | Power state | Steps |
|---|---|---|---|
| Build | scheduling | None | 3-12 |
| Build | networking | None | 22-24 |
| Build | block_device_mapping | None | 25-27 |
| Build | spawning | None | 28 |
| Active | none | Running | |

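First, the relevant fields of the `req` and `body` taken from the HTTP request are validated. Once validation passes, the `create` function of nova-api is called.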

File: `nova/api/openstack/compute/servers.py`, class `ServersController`

nova-api comes in two types: the class used for `self.compute_api` is chosen from a configuration parameter, in the following file:

File: `nova/compute/__init__.py`

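The type is set by the `enable` option in the `[cells]` section of `/etc/nova/nova.conf`. When `enable=true` and the cell type is `api`, `get_cell_type` returns `api`, so nova-api is `nova.compute.cells_api.ComputeCellsAPI`; that is, `self.compute_api` is a `nova.compute.cells_api.ComputeCellsAPI` object and its `create` function is the one called. Note that the `[cells]` section configures cells v1, which is a separate mechanism from cells v2: cells v2 is always on in Stein and is not controlled by this option. When `get_cell_type` does not return `api`, `self.compute_api` is a plain `nova.compute.api.API` object. This walkthrough follows the `ComputeCellsAPI` path.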
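The selection described above can be sketched as a lookup from cell type to class path. This is a simplified, self-contained illustration rather than Nova's actual code: `CellsConf` is a hypothetical stand-in for the `[cells]` configuration section, and the mapping assumes only the class names mentioned in the text.

```python
from dataclasses import dataclass
from typing import Optional

# Cell type -> dotted path of the compute API class (names taken from the text).
CELL_TYPE_TO_CLS_NAME = {
    "api": "nova.compute.cells_api.ComputeCellsAPI",
    "compute": "nova.compute.api.API",
    None: "nova.compute.api.API",
}


@dataclass
class CellsConf:
    """Hypothetical stand-in for the [cells] section of nova.conf."""
    enable: bool
    cell_type: str


def get_cell_type(conf: CellsConf) -> Optional[str]:
    """Return the configured cell type, or None when the section is disabled."""
    if not conf.enable:
        return None
    return conf.cell_type


def compute_api_class_name(conf: CellsConf) -> str:
    """Pick the class path that self.compute_api would be built from."""
    return CELL_TYPE_TO_CLS_NAME[get_cell_type(conf)]
```

With `CellsConf(enable=True, cell_type="api")` the sketch selects the `ComputeCellsAPI` path; with `enable=False` it falls back to `nova.compute.api.API` regardless of `cell_type`.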

File: `nova/compute/cells_api.py`

The `create` function of `nova.compute.cells_api.ComputeCellsAPI` simply delegates to the `create` function of its parent class in `nova.compute.api`.

File: `nova/compute/api.py`

Here `_create_instance` is called to create the VM. nova-api creates a database entry for the new instance and finally calls `compute_task_api.build_instances` to build it.

File: `nova/conductor/api.py`

The call goes through the conductor's `rpcapi` interface, which sends the message to conductor and asks it to build the instance.

File: `nova/api/openstack/server.py`

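Why does an RPC `cast` end up invoking the conductor's `build_instances` function? When the nova-conductor service starts, it creates its RPC servers, and each RPC server is given a set of endpoints. These are unrelated to Keystone endpoints; they only share the name. The endpoints are a list of objects. When an RPC client invokes a function on an RPC server, the server searches these endpoint objects for that function and calls it. For details, see the `Service.start` flow in `nova/service.py`; the OpenStack RPC documentation covers the mechanism in more depth.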
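The endpoint lookup described above can be illustrated with a toy in-process dispatcher. This is only a sketch of the idea, not the real RPC library: `ToyRPCServer`, `ToyRPCClient`, and `ToyConductorTaskManager` are hypothetical names, there is no message queue, and `cast` runs synchronously here whereas a real cast is asynchronous.

```python
class ToyRPCServer:
    """Holds endpoint objects and dispatches incoming method names to them."""

    def __init__(self, endpoints):
        self.endpoints = list(endpoints)

    def dispatch(self, method, **kwargs):
        # Search the endpoint objects in order for a callable with this name.
        for endpoint in self.endpoints:
            func = getattr(endpoint, method, None)
            if callable(func):
                return func(**kwargs)
        raise AttributeError(f"no endpoint implements {method!r}")


class ToyConductorTaskManager:
    """Hypothetical endpoint object exposing build_instances."""

    def __init__(self):
        self.built = []

    def build_instances(self, instances):
        self.built.extend(instances)
        return len(instances)


class ToyRPCClient:
    """Client side: names the method and passes arguments to the server."""

    def __init__(self, server):
        self.server = server

    def cast(self, method, **kwargs):
        # Fire-and-forget: the return value of the remote method is discarded.
        self.server.dispatch(method, **kwargs)
        return None


manager = ToyConductorTaskManager()
client = ToyRPCClient(ToyRPCServer([manager]))
client.cast("build_instances", instances=["vm-1", "vm-2"])
```

The client only names the method; which object actually runs it is decided by the endpoint list the server was created with.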

File: `nova/conductor/manager.py`

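On the first attempt there is no rescheduling. `_schedule_instances` calls `self.query_client.select_destinations` to choose compute nodes; in other words, conductor uses the scheduler client to issue an RPC request.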

File: `nova/scheduler/client/query.py`

File: `nova/scheduler/rpcapi.py`

The message is sent to the scheduler as an RPC request.

File: `nova/scheduler/manager.py`

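When choosing a target node, the scheduler first queries Placement for suitable candidates, then calls `self.driver.select_destinations`, which applies the scheduler's own filters to select a node.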

File: `nova/scheduler/filter_scheduler.py`

`def _schedule(self, context, spec_obj, instance_uuids, ...`

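`_schedule` fetches host state information for the resource providers that Placement returned as candidates, and `select_destinations` then completes scheduling by choosing the compute node.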
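The two-stage selection (Placement candidates first, then the scheduler's own filters) can be sketched as follows. This is a toy model under stated assumptions, not the FilterScheduler implementation: `HostState` here is a hypothetical dataclass, the two filters are illustrative, and ranking by free RAM is an assumed stand-in for the real weighing step.

```python
from dataclasses import dataclass


@dataclass
class HostState:
    """Hypothetical, minimal view of a compute host's free resources."""
    host: str
    free_ram_mb: int
    free_vcpus: int


def ram_filter(host, request):
    return host.free_ram_mb >= request["ram_mb"]


def vcpu_filter(host, request):
    return host.free_vcpus >= request["vcpus"]


def select_destination(candidate_names, host_states, request, filters):
    """Pick one host from the candidates that passes every filter."""
    # Stage 1: keep only hosts that were returned as candidates.
    candidates = [host_states[n] for n in candidate_names if n in host_states]
    # Stage 2: apply each filter; a host must pass all of them.
    passing = [h for h in candidates if all(f(h, request) for f in filters)]
    if not passing:
        raise RuntimeError("no valid host found")
    # Assumed weighing rule for this sketch: prefer the most free RAM.
    return max(passing, key=lambda h: h.free_ram_mb)


hosts = {
    "node1": HostState("node1", free_ram_mb=8192, free_vcpus=1),
    "node2": HostState("node2", free_ram_mb=4096, free_vcpus=4),
    "node3": HostState("node3", free_ram_mb=16384, free_vcpus=8),
}
chosen = select_destination(
    ["node1", "node2"], hosts, {"ram_mb": 2048, "vcpus": 2},
    [ram_filter, vcpu_filter],
)
```

In this example `node3` is never considered because it is not among the candidates, and `node1` is dropped by the vCPU filter, so `node2` is chosen.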