Query duplicate rows in a table, showing each duplicated value and its count

```sql
select user_name, count(*) as count from user group by user_name having count > 1;
```
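You can try the same duplicate check without a MySQL server. The sketch below uses Python's built-in sqlite3 with a made-up `users` table and sample rows. The table is renamed from `user` only to avoid keyword clashes across databases. The query filters with `HAVING COUNT(*) > 1`, which is portable. Referring to the `count` alias inside `HAVING` relies on MySQL behavior.

```python
import sqlite3


def find_duplicates(conn):
    """Return (user_name, count) for every user_name that appears more than once."""
    sql = """
        SELECT user_name, COUNT(*) AS cnt
        FROM users
        GROUP BY user_name
        HAVING COUNT(*) > 1
        ORDER BY user_name
    """
    return conn.execute(sql).fetchall()


# Illustrative in-memory table with made-up rows.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, user_name TEXT)")
conn.executemany(
    "INSERT INTO users (user_name) VALUES (?)",
    [("alice",), ("bob",), ("alice",), ("carol",), ("bob",), ("alice",)],
)
print(find_duplicates(conn))  # [('alice', 3), ('bob', 2)]
```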


OpenStack Ironic: source code analysis of the bare metal provisioning flow

Based on Ironic Stein.

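ironic-api receives the request to set provision_state and returns 202, meaning the request is processed asynchronously. The request is handled by the provision method of ironic.api.controllers.v1.node.NodeStatesController, which then calls _do_provision_action.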

```python
@METRICS.timer('NodeStatesController.provision')
```

```python
def _do_provision_action(self, rpc_node, target, configdrive=None,
```
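The 202 response means the API only accepts the request and hands it off. The actual deployment happens later in the conductor. Below is a minimal, hypothetical sketch of that split. A queue and a thread stand in for the RPC call and the conductor's worker, and none of the names are Ironic APIs.

```python
import queue
import threading

# Hypothetical, heavily simplified model: the queue stands in for the RPC hop to the
# conductor plus the conductor's worker thread. None of these names are Ironic APIs.
work_queue = queue.Queue()
nodes = {"node-1": {"provision_state": "available", "target_provision_state": None}}


def provision(node_id, target):
    """API side: validate the request, enqueue the work, and answer 202 right away."""
    if target != "active":
        return 400
    nodes[node_id]["target_provision_state"] = "active"
    work_queue.put(node_id)
    return 202  # Accepted: the deployment has only been scheduled


def conductor_worker():
    """Conductor side: take queued nodes and drive them to their target state."""
    while True:
        node_id = work_queue.get()
        if node_id is None:  # shutdown sentinel
            break
        node = nodes[node_id]
        node["provision_state"] = "deploying"
        # ... prepare() and deploy() would run here ...
        node["provision_state"] = node["target_provision_state"]
        node["target_provision_state"] = None
        work_queue.task_done()


worker = threading.Thread(target=conductor_worker, daemon=True)
worker.start()
status = provision("node-1", "active")
work_queue.join()  # only for this demo; a real client polls the node instead
work_queue.put(None)
print(status, nodes["node-1"]["provision_state"])  # 202 active
```

A client therefore cannot assume the node is deployed when it sees 202. It has to poll provision_state, for example with `openstack baremetal node show`.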

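Next, an RPC call reaches ironic.conductor.manager.ConductorManager.do_node_deploy, which eventually starts a task that calls ironic.conductor.manager.do_node_deploy. If a configdrive is configured, it is uploaded through the Swift API. Then task.driver.deploy.prepare(task) runs, and what it does depends on the driver: pxe_* drivers use iscsi_deploy.ISCSIDeploy.prepare, while the local-disk path uses task.driver.deploy.deploy.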

```python
@METRICS.timer('ConductorManager.do_node_deploy')
```

```python
@METRICS.timer('do_node_deploy')
```
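The key point of this step is that the conductor always makes the same two calls, and the deploy interface configured on the node decides what they do. Below is a hypothetical sketch with stand-in classes, not Ironic's real ones. Their log messages only paraphrase the steps described in this section.

```python
class ISCSIDeploy:
    """Stand-in for the iSCSI deploy interface (hypothetical, simplified)."""

    def prepare(self, task):
        task.log.append("iscsi prepare: DHCP, PXE config, cache images, boot device")

    def deploy(self, task):
        task.log.append("iscsi deploy: power off, tenant network, power on")


class RamdiskDeploy:
    """Stand-in for the ramdisk deploy interface (hypothetical, simplified)."""

    def prepare(self, task):
        task.log.append("ramdisk prepare: check boot_option, save volume info, prepare_instance")

    def deploy(self, task):
        task.log.append("ramdisk deploy: power off, tenant network, power on")


class Driver:
    def __init__(self, deploy):
        self.deploy = deploy


class Task:
    def __init__(self, driver):
        self.driver = driver
        self.log = []


def do_node_deploy(task, configdrive=None):
    """Mirror the call order described above: configdrive, then prepare, then deploy."""
    if configdrive is not None:
        task.log.append("store configdrive")  # uploaded to Swift in the real flow
    task.driver.deploy.prepare(task)  # what runs depends on the node's deploy interface
    task.driver.deploy.deploy(task)
    return task.log


for deploy_iface in (ISCSIDeploy(), RamdiskDeploy()):
    print(do_node_deploy(Task(Driver(deploy_iface)), configdrive="cfg"))
```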

For iSCSI boot, task.driver.deploy.prepare(task) calls prepare(task) in iscsi_deploy.py. That method first calls task.driver.boot.prepare_instance(task), which configures DHCP, prepares the PXE config, caches the images, and sets the boot device in the BIOS over IPMI.


By default (local boot), ironic.drivers.modules.pxe.PXERamdiskDeploy.prepare checks that boot_option is valid and then calls populate_storage_driver_internal_info. If the node boots from iSCSI, this saves the boot volume information into internal_info. Finally it calls ironic.drivers.modules.pxe.PXEBoot.prepare_instance to start the deployment.

```python
@METRICS.timer('RamdiskDeploy.prepare')
```

ironic.drivers.modules.pxe.PXEBoot.prepare_instance then configures DHCP, prepares the PXE config, caches the images, and sets the boot device in the BIOS over IPMI.


Next, task.deploy is called.


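For iSCSI boot, iscsi_deploy.ISCSIDeploy.deploy is called directly. The root disk is a Cinder volume, so no image needs to be cached. The node is powered off, switched to the tenant network, and powered back on.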


For a local disk, pxe.PXEBoot.deploy starts the deployment: it powers the node off, configures the tenant network, and powers it back on.
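Both the iSCSI and the local-disk paths end with the same ordered sequence. Below is a hypothetical sketch of that ordering. The fake node refuses to switch networks while powered on, so the order is enforced rather than merely followed.

```python
class FakeNode:
    """Hypothetical node that records every power/network action in order."""

    def __init__(self):
        self.power = "on"
        self.network = "provisioning"
        self.events = []

    def set_power(self, state):
        self.power = state
        self.events.append(f"power {state}")

    def set_network(self, network):
        # This sketch only allows a network switch while the node is powered off.
        if self.power != "off":
            raise RuntimeError("switch networks only while powered off")
        self.network = network
        self.events.append(f"network {network}")


def finish_deploy(node):
    """Power off, move the node to the tenant network, power back on."""
    node.set_power("off")
    node.set_network("tenant")
    node.set_power("on")
    return node.events


print(finish_deploy(FakeNode()))  # ['power off', 'network tenant', 'power on']
```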


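At this point the deploy is complete. A local-disk node boots into the ramdisk, while an iSCSI-boot node skips the ramdisk and boots straight into the operating system.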

When a user starts a bare metal instance with a Cinder volume, Nova orchestrates the communication between Cinder and Ironic. The workflow of the boot process is as follows:

In the workflow above, Nova calls Ironic to get and set initiator/target information (steps 4 and 6), and the administrator also calls Ironic to set initiator information (step 1). However, Ironic currently has neither this information nor APIs for it.
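To make steps 1, 4 and 6 concrete, here is a sketch of the data that has to be stored per node: the initiator (connector) and the volume targets. Ironic did later add volume connector and volume target resources, which the boot-from-volume reference further down covers. The dataclasses below only loosely follow those resources and are an illustration, not Ironic's schema. All values are placeholders.

```python
from dataclasses import dataclass, field


@dataclass
class VolumeConnector:
    """Initiator side: how the node identifies itself to storage (step 1 above)."""
    node_uuid: str
    connector_type: str  # e.g. "iqn" for an iSCSI initiator
    connector_id: str    # e.g. the initiator IQN


@dataclass
class VolumeTarget:
    """Target side: which Cinder volume to attach and how (steps 4 and 6 above)."""
    node_uuid: str
    volume_type: str     # e.g. "iscsi"
    volume_id: str       # Cinder volume UUID
    boot_index: int      # 0 marks the boot volume
    properties: dict = field(default_factory=dict)  # connection info from Cinder


def boot_target(targets):
    """Return the target with boot_index 0, or None if there is no boot volume."""
    return next((t for t in targets if t.boot_index == 0), None)


# Placeholder values, for illustration only.
connector = VolumeConnector("node-uuid", "iqn", "iqn.2019-01.org.example:node1")
targets = [
    VolumeTarget("node-uuid", "iscsi", "data-volume-uuid", boot_index=1),
    VolumeTarget("node-uuid", "iscsi", "root-volume-uuid", boot_index=0),
]
print(boot_target(targets).volume_id)  # root-volume-uuid
```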

```shell
pip install rbd-iscsi-client==0.1.8 -i https://pypi.tuna.tsinghua.edu.cn/simple
```

```shell
cinder create 100 --display-name centos7-grub2-iscsi-pwd --image-id ea4266e4-456e-4fe0-91c9-a8c15dd2d2d6 --volume-type rbd_iscsi
```

Common issues:

```shell
cat /data/docker/volumes/ironic_ipxe/_data/78\:ac\:44\:04\:f1\:a9.conf
```

```
#!ipxe
```

bs=16k, iodepth=1

Throughput (per second)

| Disk | Seq read | Seq write | Rand read | Rand write | Mixed rand read/write |
|---|---|---|---|---|---|
| Data cloud disk (SATA) | 246MB | 41.8MB | 291MB | 10.1MB | 7799kB/7894kB |
| Physical disk (SAS x2, RAID 1) | 584MB | 1418MB | 127MB | 65.4MB | 40.2MB/40.1MB |

IOPS

| Disk | Seq read | Seq write | Rand read | Rand write | Mixed rand read/write |
|---|---|---|---|---|---|
| Data cloud disk (SATA) | 15.0k | 2550 | 17.8k | 618 | 476/481 |
| Physical disk (SAS x2, RAID 1) | 35.7k | 86.6k | 7747 | 3990 | 2451/2450 |

bs=4k, iodepth=1

Throughput (per second)

| Disk | Seq read | Seq write | Rand read | Rand write | Mixed rand read/write |
|---|---|---|---|---|---|
| Data cloud disk (SATA) | 112MB | 14.1MB | 93.1MB | 2490kB | 2212kB/2238kB |
| Physical disk (SAS x2, RAID 1) | 367MB | 372MB | 21.4MB | 15.0MB | 7252kB/7263kB |

IOPS

| Disk | Seq read | Seq write | Rand read | Rand write | Mixed rand read/write |
|---|---|---|---|---|---|
| Data cloud disk (SATA) | 27.3k | 3431 | 22.7k | 607 | 559/565 |
| Physical disk (SAS x2, RAID 1) | 89.5k | 90.8k | 5223 | 3904 | 1770/1773 |
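These tables can be sanity-checked: at a fixed block size, throughput should equal IOPS × block size. The figures line up when the block size is read in KiB and MB as 10^6 bytes. For example, 15.0k × 16 KiB ≈ 245.8 MB. The helper below is a small sketch of that check, using a few rows copied from the bs=16k and bs=4k tables above.

```python
def throughput_mb(iops, block_kib):
    """Throughput in decimal MB/s implied by an IOPS figure and a block size in KiB."""
    return iops * block_kib * 1024 / 1_000_000


# (label, IOPS, block size in KiB, reported throughput in MB) for the data cloud disk.
checks = [
    ("bs=16k seq read", 15_000, 16, 246),
    ("bs=16k rand read", 17_800, 16, 291),
    ("bs=4k seq read", 27_300, 4, 112),
    ("bs=4k seq write", 3_431, 4, 14.1),
]
for name, iops, bs, reported in checks:
    print(f"{name}: computed {throughput_mb(iops, bs):.1f} MB/s, reported {reported} MB/s")
```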

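Throughput (per second), bs=1024k, iodepth=128, size=50G, ioengine=psync

NOTE: psync is a synchronous I/O engine. The fio documentation states that raising iodepth above 1 has no effect on synchronous engines, so these runs effectively kept one I/O in flight per job.

| Disk | Seq read | Seq write | Rand read | Rand write | Mixed rand read/write |
|---|---|---|---|---|---|
| iSCSI cloud disk (SATA) 600G | 840MB | 85MB | 830MB | 140MB | 135MB/140MB |
| iSCSI cloud disk (SATA) 4T | 840MB | 97MB | 870MB | 140MB | 135MB/140MB |
| Physical disk (SAS x2, RAID 1) 600G | 520MB | 2650MB | 290MB | 150MB | 95MB/95MB |
| Physical disk (SATA x1, RAID 0) 4T | 340MB | 140MB | 100MB | 120MB | 55MB/60MB |
| Physical disk (SSD x1, RAID 0) 800G | 280MB | 230MB | 420MB | 350MB | 175MB/180MB |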

IOPS, bs=4k, iodepth=128, size=50G, ioengine=psync

| Disk | Seq read | Seq write | Rand read | Rand write | Mixed rand read/write |
|---|---|---|---|---|---|
| iSCSI cloud disk (SATA) 600G | 25.9k | 2663 | 20k | 799 | 602/609 |
| iSCSI cloud disk (SATA) 4T | 26k | 3167 | 25k | 828 | 600/600 |
| Physical disk (SAS x2, RAID 1) 600G | 82.0k | 92.3k | 901 | 1232 | 685/694 |
| Physical disk (SATA x1, RAID 0) 4T | 5.8k | 7k | 327 | 276 | 142/148 |
| Physical disk (SSD x1, RAID 0) 800G | 47.9k | 6k | 46.4k | 39.6k | 20.2k/20.2k |
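Little's law gives the average latency implied by these IOPS numbers: latency = I/Os in flight / IOPS. The sketch makes two assumptions. It assumes a single job, since numjobs is not stated above. It also assumes one outstanding I/O, because psync is a synchronous engine. Under those assumptions the results are rough averages, not measured latencies.

```python
def avg_latency_ms(iops, outstanding_ios=1):
    """Little's law: average latency = I/Os in flight / completion rate (IOPS)."""
    return outstanding_ios / iops * 1000


# Random-write IOPS from the bs=4k, iodepth=128, psync table above;
# one I/O in flight is assumed (synchronous engine, numjobs=1).
rand_write_iops = {
    "iSCSI cloud disk (SATA) 600G": 799,
    "Physical disk (SAS x2, RAID 1) 600G": 1232,
    "Physical disk (SSD x1, RAID 0) 800G": 39_600,
}
for disk, iops in rand_write_iops.items():
    print(f"{disk}: ~{avg_latency_ms(iops):.3f} ms per write")
```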

Official documentation: https://docs.openstack.org/ironic/latest/install/configure-nova-flavors.html

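In the Queens release, Ironic added a Trait API. A node's traits can be registered with the Placement API of the Compute service and used for scheduling when instances are created. With the Trait API, bare metal nodes enrolled in Ironic can register their traits in the Placement resource inventory, which ultimately enables scheduling of bare metal deployments.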

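In this article we use Placement to schedule bare metal nodes. A Resource Class identifies the resource type of an ironic node, and Resource Traits describe its characteristics. Setting resources:VCPU=0, resources:MEMORY_MB=0 and resources:DISK_GB=0 on the flavor disables scheduling on the standard VCPU, memory and disk resources.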
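Below is a sketch of how an Ironic node's resource_class maps to the flavor extra specs described above. The normalization rule is assumed from the Ironic flavor documentation linked above: upper-case the name, replace runs of non-alphanumeric characters with `_`, and add a `CUSTOM_` prefix. The `bms.test` flavor name is the one used in this section.

```python
import re


def placement_resource_class(ironic_rc):
    """Map an Ironic node resource_class to its custom Placement class name.

    Assumed rule: upper-case, runs of non-alphanumerics become '_', prefix CUSTOM_.
    """
    return "CUSTOM_" + re.sub(r"[^0-9A-Z]+", "_", ironic_rc.upper())


def baremetal_flavor_extra_specs(ironic_rc):
    """Extra specs that request one whole node and disable standard-resource scheduling."""
    return {
        f"resources:{placement_resource_class(ironic_rc)}": "1",
        "resources:VCPU": "0",
        "resources:MEMORY_MB": "0",
        "resources:DISK_GB": "0",
    }


for key, value in baremetal_flavor_extra_specs("BAREMETAL").items():
    print(f"openstack flavor set --property {key}={value} bms.test")
```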

```shell
openstack flavor create --ram 262144 --vcpus 40 --disk 600 bms.test
```

```shell
openstack allocation candidate list --resource VCPU=40 --resource DISK_GB=600 --resource MEMORY_MB=262144 --resource CUSTOM_BAREMETAL=1
```

```shell
openstack allocation candidate list --resource CUSTOM_BAREMETAL=1
```
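Here is roughly how Placement decides whether a provider appears in `allocation candidate list`. Every requested resource class must exist in the provider's inventory with enough free capacity, where capacity is (total - reserved) × allocation_ratio. This is a simplified sketch that ignores min_unit, max_unit, step_size and traits. The example inventory assumes the node reports only its custom resource class, as the Nova ironic driver does in recent releases. In that case, a request that also asks for VCPU, like the first query above, would find no candidate.

```python
def capacity(inv):
    """Usable capacity of one resource class: (total - reserved) * allocation_ratio."""
    return (inv["total"] - inv["reserved"]) * inv["allocation_ratio"]


def is_candidate(inventories, usages, request):
    """True if the provider can satisfy every requested amount (simplified)."""
    for rc, amount in request.items():
        inv = inventories.get(rc)
        if inv is None:  # provider has no inventory of this class at all
            return False
        if usages.get(rc, 0) + amount > capacity(inv):
            return False
    return True


# Assumed inventory of one ironic node: a single unit of its custom class.
ironic_node = {"CUSTOM_BAREMETAL": {"total": 1, "reserved": 0, "allocation_ratio": 1.0}}
print(is_candidate(ironic_node, {}, {"CUSTOM_BAREMETAL": 1}))                      # True
print(is_candidate(ironic_node, {}, {"VCPU": 40, "CUSTOM_BAREMETAL": 1}))          # False
print(is_candidate(ironic_node, {"CUSTOM_BAREMETAL": 1}, {"CUSTOM_BAREMETAL": 1}))  # False
```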

```shell
openstack baremetal node create --driver ipmi --name BM01
```

Many fields of the new ironic node are still None at this point and have to be filled in later.

```shell
openstack baremetal node set 5e10c067-c0c6-48be-ac6e-749a70045b97 \
```

```shell
openstack baremetal node set 5e10c067-c0c6-48be-ac6e-749a70045b97 \
```

NOTE: see the official IPMI driver documentation.

```shell
openstack image list
```

```shell
neutron net-create ironic_deploy --shared --provider:network_type flat --provider:physical_network physnet1
```

```shell
openstack baremetal port create 78:ac:44:04:f1:a9 --node 5e10c067-c0c6-48be-ac6e-749a70045b97
```

NOTE: after a bare metal instance is deployed successfully, the MAC address of the PXE NIC is bound to the corresponding tenant network port.

```shell
openstack baremetal node set 5e10c067-c0c6-48be-ac6e-749a70045b97 --resource-class BAREMETAL
```


NOTE: this operation requires a relatively recent Placement API microversion (>= 1.17).

```shell
openstack baremetal node set 5e10c067-c0c6-48be-ac6e-749a70045b97 \
```
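Traits set on a node follow Placement's naming rules, and anything that is not a standard trait must be a custom one. Below is a small validator sketch. It assumes the custom-trait rule is a `CUSTOM_` prefix, followed only by upper-case letters, digits and underscores, with 255 characters at most.

```python
import re

# Assumed naming rule for custom traits: CUSTOM_ prefix, then upper-case letters,
# digits and underscores only, at most 255 characters in total.
CUSTOM_TRAIT = re.compile(r"CUSTOM_[A-Z0-9_]+")


def is_valid_custom_trait(name):
    return len(name) <= 255 and CUSTOM_TRAIT.fullmatch(name) is not None


for name in ["CUSTOM_GPU_V100", "custom_gpu", "CUSTOM_GPU-V100", "CUSTOM_"]:
    print(name, is_valid_custom_trait(name))
```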

```shell
openstack baremetal node set 5e10c067-c0c6-48be-ac6e-749a70045b97 --property capabilities='boot_mode:uefi'
```
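`--property capabilities=...` sets the whole capabilities string. Any capability already on the node, for example `boot_option:local`, is dropped unless you repeat it. Below is a sketch of merging one key into the existing `key:value,key:value` string before setting it. The helper names are hypothetical, and `<node>` is a placeholder.

```python
def parse_capabilities(raw):
    """Parse a capabilities string 'k1:v1,k2:v2' into a dict (None or '' -> {})."""
    caps = {}
    for item in filter(None, (part.strip() for part in (raw or "").split(","))):
        key, _, value = item.partition(":")
        caps[key] = value
    return caps


def format_capabilities(caps):
    return ",".join(f"{k}:{v}" for k, v in caps.items())


def set_capability(raw, key, value):
    """Return a new capabilities string with one key updated and the others kept."""
    caps = parse_capabilities(raw)
    caps[key] = value
    return format_capabilities(caps)


new_caps = set_capability("boot_option:local", "boot_mode", "uefi")
print(f"openstack baremetal node set <node> --property capabilities='{new_caps}'")
```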

```shell
openstack baremetal node validate e322f49a-ad50-468d-a031-29bde068c290
```

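NOTE: 4 interfaces report False here, but none of these failures is fatal. bios and console fail because we did not configure the corresponding interfaces, which are optional. boot and deploy cannot pass validation in an environment that uses the Nova driver for Ironic, because the instance information they check (such as the image) is only filled in by Nova at deploy time.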

```shell
# To move a node from enroll to manageable provision state
```
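The provision states mentioned in that comment form a state machine. Below is a heavily simplified sketch of the path from enroll to available. The real machine has many more states and failure transitions, and `done` here stands for the conductor finishing verifying or cleaning. The `manage` and `provide` events correspond to `openstack baremetal node manage` and `openstack baremetal node provide`.

```python
# Simplified subset of Ironic's provision state machine; failures and most other
# states are left out, and "done" marks an intermediate step completing.
TRANSITIONS = {
    ("enroll", "manage"): "verifying",
    ("verifying", "done"): "manageable",
    ("manageable", "provide"): "cleaning",
    ("cleaning", "done"): "available",
}


def apply(state, event):
    """Return the next state, or raise if the event is not allowed in this state."""
    try:
        return TRANSITIONS[(state, event)]
    except KeyError:
        raise ValueError(f"event {event!r} not allowed in state {state!r}") from None


state = "enroll"
for event in ["manage", "done", "provide", "done"]:
    state = apply(state, event)
    print(event, "->", state)
```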

```shell
openstack baremetal node show 5e10c067-c0c6-48be-ac6e-749a70045b97
```

```shell
openstack network agent list
```

Reference: https://docs.openstack.org/ironic/stein/admin/boot-from-volume.html#storage-interface

Bare metal storage architecture

Storage

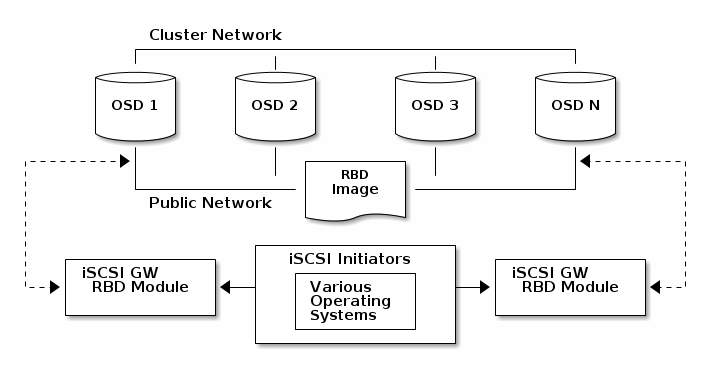

Ceph iSCSI cluster architecture

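PXE is an industry standard that describes how clients and servers interact with network boot software, using the DHCP and TFTP protocols. This guide describes one way to install Clear Linux OS using a PXE environment.

An extension of PXE called iPXE adds support for protocols such as HTTP, iSCSI, AoE, and FCoE. iPXE enables network booting on computers without built-in PXE support.

To install Clear Linux OS via iPXE, you must create a PXE client. Figure 1 depicts the flow of information between the PXE server and the PXE client.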

Here is the ironic dnsmasq configuration:

```shell
cat /etc/kolla/ironic-dnsmasq/dnsmasq.conf
```
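The dnsmasq config is where PXE and iPXE meet. Below is a hedged sketch of the usual chainloading decision such a config encodes. On the first pass, the NIC's PXE ROM downloads an iPXE binary over TFTP. Once iPXE is running, it identifies itself with the DHCP user class `iPXE` and receives an HTTP script instead. The file names (`undionly.kpxe`, `ipxe.efi`, `boot.ipxe`) and the HTTP root are assumptions for illustration. The real values are in the dnsmasq.conf printed by the command above.

```python
# Hypothetical model of the PXE -> iPXE chainload logic a dnsmasq config typically encodes.
EFI_ARCHES = {7, 9}  # DHCP option 93 client-arch values commonly used for x86-64 UEFI


def choose_boot_file(user_class, client_arch, http_root):
    """Return what the DHCP server would hand out as the boot file name."""
    if user_class == "iPXE":
        # Second pass: iPXE is already running, so give it an HTTP script.
        return f"{http_root}/boot.ipxe"
    if client_arch in EFI_ARCHES:
        # First pass on UEFI firmware: fetch the UEFI iPXE binary over TFTP.
        return "ipxe.efi"
    # First pass on legacy BIOS firmware.
    return "undionly.kpxe"


for request in [("", 0), ("", 7), ("iPXE", 0)]:
    print(request, "->", choose_boot_file(*request, "http://192.0.2.10:8080"))
```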

*With the direct deploy interface, the deploy ramdisk fetches the image from an HTTP location. It can be an object storage (Swift or RadosGW) temporary URL or a user-provided HTTP URL. The deploy ramdisk then copies the image to the target disk.*
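Below is a minimal sketch of that fetch-and-copy step, assuming a plain URL and a SHA-256 checksum. The real deploy ramdisk (ironic-python-agent) also handles image formats, partitioning and writing to a block device, none of which is modeled here. A local file:// URL stands in for the temporary URL, and a temporary file stands in for the target disk.

```python
import hashlib
import tempfile
import urllib.request
from pathlib import Path


def fetch_image(url, dest_path, expected_sha256, chunk_size=1024 * 1024):
    """Stream url into dest_path in chunks and verify the SHA-256 of what was written."""
    digest = hashlib.sha256()
    with urllib.request.urlopen(url) as resp, open(dest_path, "wb") as out:
        while True:
            chunk = resp.read(chunk_size)
            if not chunk:
                break
            digest.update(chunk)
            out.write(chunk)
    if digest.hexdigest() != expected_sha256:
        raise ValueError("checksum mismatch: image must not be used")
    return dest_path


# Demo: a file:// URL stands in for the Swift/RadosGW temp URL,
# and a temporary file stands in for the target disk.
with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp, "image.raw")
    src.write_bytes(b"\x00" * 4096 + b"fake image")
    dest = Path(tmp, "disk.img")
    fetch_image(src.as_uri(), dest, hashlib.sha256(src.read_bytes()).hexdigest())
    copied_ok = dest.read_bytes() == src.read_bytes()
print(copied_ok)  # True
```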

```shell
openstack baremetal node create --driver ipmi --deploy-interface direct
```

Common MySQL troubleshooting commands

MySQL 8.0

```sql
-- All currently running transactions
```

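Watch out for this nested map pattern: `make` only initializes the outer `map[string]T` (where T is `map[int]int`). Each inner map is still nil, so the assignment below panics at runtime with `assignment to entry in nil map`: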

Wrong usage

```go
test := make(map[string]map[int]int)
test["a"][1] = 1 // panics: test["a"] is a nil map
```

Correct usage

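```go
test := make(map[string]map[int]int)
test["a"] = make(map[int]int) // initialize the inner map first
test["a"][1] = 1
```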

Common usage

```go
test := make(map[string]map[int]int)
```